Discovery on a Grand Scale

Linking asteroids in the NOIRLab Source Catalog on ADAM::THOR

The Asteroid Institute has performed an asteroid discovery analysis of the NOIRLab Source Catalog (NSC) Data Release 2. Using the THOR algorithm and computing resources provided by Google Cloud, the team has identified over 27,500 asteroid candidates. THOR is uniquely able to discover asteroids in datasets not traditionally designed for asteroid discovery. This work is a follow-up to the initial validation of THOR on a subset of NSC.

In the visualization above, you can see the orbits of our discovery candidate set, animated at 2 days per second. You can zoom and pan by scrolling or clicking and dragging within the visualization bounds. You may need to zoom out in order to see the full extent of the orbits. The majority of the discoveries are in the main belt, but there are also a number of NEOs and other exotica.

Preliminary Results

Total discovery candidates: 27,500

Near-Earth asteroids: ~150

Jupiter Trojans: 100s

Centaurs: 100s

Trans-Neptunian objects: 10s
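The categories above are standard dynamical classes. As a rough sketch of how an orbit might be sorted into them, the function below uses conventional boundaries similar to the JPL Small-Body Database orbit classes (NEO: perihelion q < 1.3 AU; Jupiter Trojan: 4.6 < a < 5.5 AU with e < 0.3; Centaur: 5.5 ≤ a < 30.1 AU; TNO: a ≥ 30.1 AU). These thresholds, and the `Orbit` and `classify` names, are assumptions for illustration; the criteria behind the counts above are not stated, and a true Trojan classification would also require checking libration about Jupiter's L4/L5 points.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Orbit:
    """Hypothetical minimal orbit record: only the elements needed here."""
    a: float  # semi-major axis [AU]
    e: float  # eccentricity

    @property
    def q(self) -> float:
        """Perihelion distance [AU]."""
        return self.a * (1.0 - self.e)


def classify(orbit: Orbit) -> str:
    """Assign a coarse dynamical class using assumed, conventional boundaries."""
    if orbit.e >= 1.0:
        return "unbound"         # parabolic or hyperbolic orbit
    if orbit.q < 1.3:
        return "NEO"             # perihelion inside 1.3 AU
    if 4.6 < orbit.a < 5.5 and orbit.e < 0.3:
        return "Jupiter Trojan"  # crude proxy; real Trojans librate about L4/L5
    if 5.5 <= orbit.a < 30.1:
        return "Centaur"         # between Jupiter and Neptune
    if orbit.a >= 30.1:
        return "TNO"             # beyond Neptune's semi-major axis
    return "other"               # main belt, Hildas, Mars-crossers, ...


if __name__ == "__main__":
    for orb in (Orbit(2.7, 0.1), Orbit(1.5, 0.3), Orbit(5.2, 0.1),
                Orbit(12.0, 0.2), Orbit(44.0, 0.05)):
        print(orb, classify(orb))
```

Checking NEO status first means a high-eccentricity object with a large semi-major axis but a small perihelion is counted as an NEO rather than a Centaur, which matches how perihelion-based NEO definitions are usually applied.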

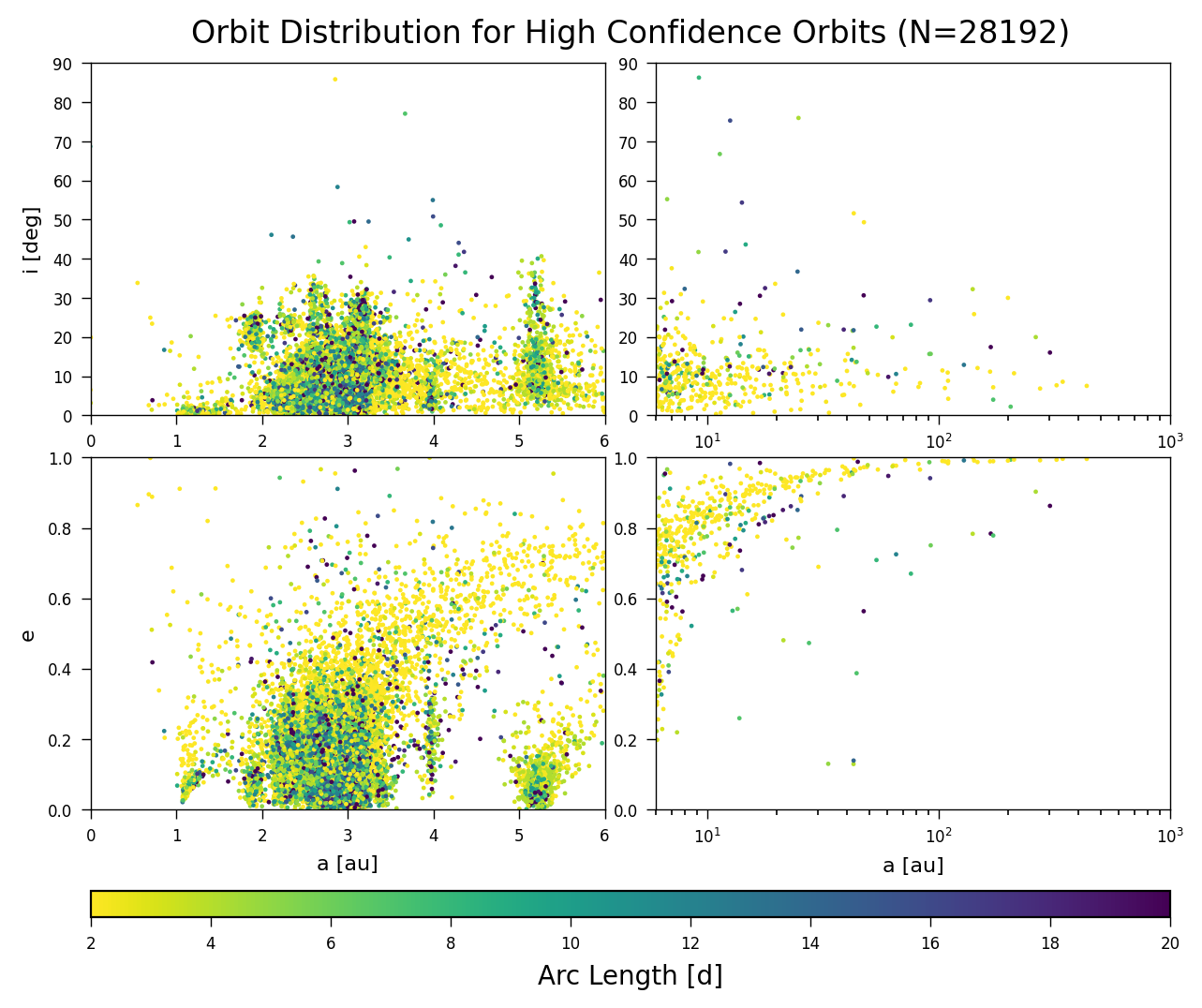

We are currently carefully reviewing the most interesting and outlying cases before submission to the Minor Planet Center, hence the approximate numbers given above. Our discovery candidates generally have high orbital uncertainties: in most cases, the observations comprising the orbit span an arc of less than one week. Not included here are a handful of unclassified objects with comet-like or otherwise outlandish orbits, which may or may not survive further scrutiny. Because the simulation above is a two-body approximation, and orbits are only well defined near their (usually short) discovery arcs, the visualized orbits likely diverge from the true orbits quite rapidly.
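To give a feel for how quickly a short-arc orbit can drift, here is a minimal worked sketch, not the Institute's uncertainty analysis. It assumes the only error is in the semi-major axis a and uses the two-body relation n = k·a^(-3/2), so to first order δn ≈ (3/2)·n·δa/a and the along-track position error after time t is roughly a·δn·t. The helper names and the values a = 2.7 AU and σa = 0.01 AU are illustrative assumptions, not measured uncertainties of any candidate.

```python
import math

# Gaussian gravitational constant: sqrt(GM_sun) in AU^1.5 / day, so that
# n = k / a^1.5 gives mean motion in rad/day (massless body, two-body problem).
GAUSS_K = 0.01720209895
AU_KM = 149_597_870.7  # kilometres per astronomical unit


def mean_motion(a_au: float) -> float:
    """Two-body mean motion [rad/day] for semi-major axis a [AU]."""
    return GAUSS_K / a_au ** 1.5


def along_track_drift_au(a_au: float, sigma_a_au: float, days: float) -> float:
    """First-order along-track position error [AU] after `days` from an error in a.

    From n = k a^(-3/2): |dn| = (3/2) n (da / a). The error in mean anomaly
    grows linearly as |dn| * t; multiplying by a converts it to a distance.
    """
    dn = 1.5 * mean_motion(a_au) * sigma_a_au / a_au
    return a_au * dn * days


if __name__ == "__main__":
    # Assumed example: a main-belt orbit with a 0.01 AU semi-major-axis error.
    for days in (7, 30, 365):
        drift = along_track_drift_au(2.7, 0.01, days)
        print(f"{days:>4} d: {drift:.5f} AU (~{drift * AU_KM:,.0f} km)")
```

The error grows linearly with time in this approximation, so an orbit fitted from a few days of data can be off by a large fraction of its displayed position within months. Real short-arc uncertainties involve all six elements and are usually larger.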

Dataset

The NOIRLab Source Catalog (NSC) is a catalog of nearly all of the public imaging data in NOIRLab’s Astro Data Archive. These images from telescopes in both hemispheres nearly cover the entire sky, but data are principally (~98%) from the Dark Energy Camera (DECam) on the Blanco 4-meter telescope at Cerro Tololo Inter-American Observatory (CTIO). The Asteroid Institute’s THOR run detailed here was performed on the second data release (DR2), spanning 412,116 images taken from October 2012 through October 2019.

Institutional collaborators had indicated that NSC would likely contain observations of many undiscovered asteroids, but the specifics of the dataset present some major challenges. First, the data reduction pipeline did not include a difference imaging step, so static sources are included. To complicate matters, the data are deep enough (limiting magnitude of 23.5 in some cases) that existing static source catalogs are of limited use for ruling out sources via a positional crossmatch. This required us to write a bespoke static source filter that, while it retained a small number of static sources, reduced the point source database from 68 billion points to a more manageable 1.7 billion.
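The filter itself is not described above. One simple idea, sketched here purely as a hypothetical example, exploits the fact that a star or galaxy reappears at the same position night after night while an asteroid moves: bin detections into small sky cells and discard detections in cells occupied on several distinct nights. The `Detection`, `cell_key`, and `filter_static` names, the 1 arcsec cell size, and the three-night threshold are all illustrative assumptions.

```python
import math
from collections import defaultdict
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    ra_deg: float
    dec_deg: float
    mjd: float


def cell_key(ra_deg: float, dec_deg: float, cell_arcsec: float) -> Tuple[int, int]:
    """Bin a position into a roughly square sky cell.

    RA is scaled by cos(dec) so cells have similar angular width at all
    declinations. Simplifications: no RA wrap-around at 0/360 deg, and a
    source sitting on a cell edge can be split across two cells.
    """
    cell_deg = cell_arcsec / 3600.0
    x = ra_deg * math.cos(math.radians(dec_deg))
    return math.floor(x / cell_deg), math.floor(dec_deg / cell_deg)


def filter_static(detections: List[Detection], cell_arcsec: float = 1.0,
                  min_nights: int = 3) -> List[Detection]:
    """Drop detections whose sky cell is occupied on >= min_nights distinct nights."""
    keys = [cell_key(d.ra_deg, d.dec_deg, cell_arcsec) for d in detections]
    nights_per_cell = defaultdict(set)
    for d, key in zip(detections, keys):
        # Integer MJD is a crude stand-in for "observing night".
        nights_per_cell[key].add(math.floor(d.mjd))
    return [d for d, key in zip(detections, keys)
            if len(nights_per_cell[key]) < min_nights]
```

A filter of this kind leaks in exactly the way the text describes: a static source observed on fewer than `min_nights` nights, or one whose detections straddle a cell edge, survives and must be caught later in the pipeline.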

Further complicating matters is the sheer depth of the survey: NSC contains many deep stares, or repeated observations of the same crowded fields. While this is a domain in which THOR excels, the computational cost, especially of clustering, grows quickly with the number of detections. This is one of the key reasons we needed such large-scale compute to process the dataset in a reasonable timeframe, and these computational realities heavily informed the compute architecture described below.
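To see why dense fields make clustering expensive, here is a simplified sketch of a velocity-grid search of the kind described for THOR, which clusters detections after projecting them into a frame that co-moves with a test orbit. In this illustration the projection is assumed to have been done already, and simple grid binning replaces a density-based clusterer such as DBSCAN. The function name, velocity grid, and thresholds are hypothetical. The returned visit count makes the cost explicit: every detection is revisited once per velocity hypothesis.

```python
from collections import defaultdict
from itertools import product
from typing import FrozenSet, List, Sequence, Tuple

Point = Tuple[float, float, float]             # (x, y, dt): projected position [deg], time offset [days]
Cluster = Tuple[float, float, FrozenSet[int]]  # (vx, vy, member indices)


def shift_and_cluster(points: Sequence[Point], vx_grid: Sequence[float],
                      vy_grid: Sequence[float], cell: float = 0.001,
                      min_points: int = 5) -> Tuple[List[Cluster], int]:
    """Search a grid of linear velocities for co-located detections.

    For each (vx, vy) hypothesis, undo that motion and bin the shifted
    positions; bins with >= min_points members become candidate clusters.
    Neighbouring velocities can rediscover the same cluster, so the output
    would still need de-duplication. Returns the clusters and the number of
    point visits, which grows as len(points) * len(vx_grid) * len(vy_grid).
    """
    clusters: List[Cluster] = []
    visits = 0
    for vx, vy in product(vx_grid, vy_grid):
        bins = defaultdict(list)
        for i, (x, y, dt) in enumerate(points):
            key = (round((x - vx * dt) / cell), round((y - vy * dt) / cell))
            bins[key].append(i)
            visits += 1
        for members in bins.values():
            if len(members) >= min_points:
                clusters.append((vx, vy, frozenset(members)))
    return clusters, visits
```

With, say, a 100 × 100 velocity grid, each detection in a test orbit's field is visited 10,000 times, so crowded, repeatedly observed fields quickly dominate the compute budget.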

Analysis

Point sources searched: 1.7 billion

vCPU hours used: 8.5 million

THOR test orbits: 227,440

The analysis pipeline starts with data ingestion. The NOIRLab Source Catalog (DR2) is one of many telescope catalogs ingested into a Unified Dataset, though it is the only dataset we used for this discovery run. These data are filtered and normalized so that, ideally, they contain only point source detections that represent likely moving sources. These point sources are stored in two formats: first, in BigQuery for easy access and large-scale analysis; second, in an indexed binary format used by our Precovery service (Precovery DB).
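As a rough illustration of why an index helps precovery, here is a hypothetical in-memory stand-in for an indexed detection store. Detections are sorted by time, so a query for a predicted position at a given epoch only scans a narrow time window (found by binary search) before applying an angular-distance cut. The class and field names, the time window, and the search radius are assumptions; the actual Precovery DB layout is not described here.

```python
import bisect
import math
from typing import Iterable, List, NamedTuple


class Detection(NamedTuple):
    mjd: float
    ra_deg: float
    dec_deg: float
    det_id: str


def angular_sep_deg(ra1: float, dec1: float, ra2: float, dec2: float) -> float:
    """Great-circle separation in degrees (haversine formula)."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(math.sqrt(min(1.0, h))))


class TimeIndex:
    """Hypothetical time-sorted detection index supporting cone searches."""

    def __init__(self, detections: Iterable[Detection]):
        self._dets = sorted(detections, key=lambda d: d.mjd)
        self._times = [d.mjd for d in self._dets]

    def query(self, mjd: float, ra_deg: float, dec_deg: float,
              radius_arcsec: float, window_days: float = 1e-3) -> List[Detection]:
        """Detections within radius_arcsec of a predicted position near epoch mjd."""
        lo = bisect.bisect_left(self._times, mjd - window_days)
        hi = bisect.bisect_right(self._times, mjd + window_days)
        radius_deg = radius_arcsec / 3600.0
        return [d for d in self._dets[lo:hi]
                if angular_sep_deg(d.ra_deg, d.dec_deg, ra_deg, dec_deg) <= radius_deg]
```

A production store would presumably also partition by sky region so that each query touches only a small fraction of the data on disk.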

The linking is performed by THOR (for more information on THOR, check out the repository) across thousands of concurrent containers inside Google Kubernetes Engine. An orchestrator partitions the data, generates test orbits based on each input partition, and initializes a container for each test orbit. As each test orbit completes, its container uploads the results to a cloud storage bucket. This process is checkpointed and runs on spot instances to minimize costs.
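The orchestration pattern described above can be sketched sequentially in a few lines. This is a hypothetical illustration, not the Asteroid Institute's orchestrator: a plain dict stands in for the cloud storage bucket, `run_one` stands in for a THOR container processing one test orbit, and `PreemptedError` is an invented exception representing a reclaimed spot instance. Checkpointing here simply means skipping any test orbit whose result already exists in the store.

```python
from typing import Callable, Dict, Iterable, List


class PreemptedError(RuntimeError):
    """Hypothetical: raised when a spot instance is reclaimed mid-task."""


def run_with_checkpoints(test_orbit_ids: Iterable[str],
                         run_one: Callable[[str], str],
                         store: Dict[str, str],
                         max_attempts: int = 3) -> List[str]:
    """Run each test orbit once, skipping finished ones; return IDs that kept failing."""
    failed: List[str] = []
    for orbit_id in test_orbit_ids:
        if orbit_id in store:  # checkpoint: result already uploaded
            continue
        for _ in range(max_attempts):
            try:
                store[orbit_id] = run_one(orbit_id)  # "upload" on completion
                break
            except PreemptedError:
                pass  # retry; a real system would reschedule on a new node
        else:
            failed.append(orbit_id)  # every attempt was preempted
    return failed
```

Because results are written as soon as each test orbit finishes, rerunning the function after an interruption repeats only unfinished work, which is what makes cheap, preemptible spot capacity practical. In a real deployment the loop body would be dispatched to many containers in parallel.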

The recovered orbits produced by THOR then go through an additional merge-and-extend process called Iterative Precovery and Orbit Determination (IPOD). This step removes duplicate orbits and uses our Precovery service to extend the orbits' arcs. The refined orbits are then uploaded to cloud storage.
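IPOD's merge rules are not spelled out here, so the following is only a hypothetical sketch of one simple duplicate-removal criterion: if every detection in one orbit's linkage also belongs to a linkage at least as large, the first is treated as a duplicate and dropped. The function name and the orbit and detection IDs are made up for illustration, and the arc-extension half of IPOD (precovery plus orbit refitting) is not modeled.

```python
from typing import Dict, FrozenSet


def remove_subset_duplicates(orbits: Dict[str, FrozenSet[str]]) -> Dict[str, FrozenSet[str]]:
    """Keep only linkages that are not contained in an already-kept linkage.

    `orbits` maps a (hypothetical) orbit ID to the set of detection IDs it links.
    """
    # Largest linkages first (ties by ID), so supersets are kept before subsets.
    ordered = sorted(orbits.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    kept: Dict[str, FrozenSet[str]] = {}
    for orbit_id, obs in ordered:
        if any(obs <= other for other in kept.values()):
            continue  # every detection already explained by a kept orbit
        kept[orbit_id] = obs
    return kept
```

The pairwise subset check is quadratic in the number of orbits; at NSC scale one would presumably index linkages by shared detection IDs first.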

The list of refined orbits represents the candidate discoveries, but due to the nature of this dataset, there are many false positives. These are chiefly cosmic ray hits that survived source extraction and static sources that evaded our filters. To identify high-quality discovery candidates, millions of cutout images are fetched from NOIRLab and stored in cloud storage for validation. The images and detections in the discovery candidates are sent to a machine learning model that scores each individual detection. The candidates are sorted by those scores and then validated by a human expert. Finally, discoveries are submitted to the Minor Planet Center for review.
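The ranking step can be sketched as follows. The model itself is not reproduced: the input is assumed to be a mapping from candidate ID to the per-detection scores the model produced, and both the function name and the aggregation rule (score a candidate by its weakest detection) are hypothetical choices made for illustration.

```python
from typing import Dict, List, Sequence, Tuple


def rank_candidates(scores: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Order candidates for human review, most promising first.

    Hypothetical rule: a candidate's score is its weakest detection's score,
    since a single artifact (e.g. a cosmic ray) can make a linkage spurious.
    Candidates with no scored detections are skipped.
    """
    ranked = [(cid, min(s)) for cid, s in scores.items() if s]
    ranked.sort(key=lambda item: (-item[1], item[0]))  # ties broken by ID
    return ranked
```

Taking the minimum rather than the mean keeps a candidate with one obviously bad detection from hiding behind several good ones; other aggregations are equally plausible.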

Collaborations and Support

This work was made possible by the generous support of Google Cloud, which helped both with computing resources and with the development of a machine learning model to reject false positives.

We are grateful to the team at NOIRLab for their responsiveness to requests for data access and their support with our questions about the data and its intricacies.

We also thank our institutional collaborators for their involvement, especially those at the University of Washington, who have supported the Asteroid Institute for many years.

We want to thank our team of volunteers who assisted with result vetting and candidate validation.

Finally, we want to thank our generous supporters around the world. The Asteroid Institute is a program of the B612 Foundation, a non-profit organization, and B612 relies on the support of our donors. For more information on how you can get involved and support our work, please go here.